LinkedIn Job Posts Analysis

Get insight into what it took to make this project a reality.

4/14/2023 · 5 min read

You can see the live interactive dashboard on Tableau Public here.

This project was one of the first I embarked on when I began building my portfolio. I researched which types of projects are best to include in a data analytics portfolio, and one that consistently appeared on the lists of examples I found was web scraping. Although I had some training with Beautiful Soup, I had never completed this type of project or used web scraping in my career, as I typically work with proprietary data.

The question became: what type of data did I want to scrape? I stumbled across some projects that scraped job post data from sites like LinkedIn and Indeed. I thought this would be a perfect project for me, as I could actually use the data to assist in my job search.

The project takes shape

As I began writing the web scraping script in Python, I decided to focus on scraping job posts from LinkedIn, as this is my preferred job search platform. I also decided to limit the project to a Python script that exported the scraped data into Excel, and to use that data simply to help direct my search. I could sort the spreadsheet of job posts that met my search criteria by the newest opportunities with the fewest applicants and prioritize applying to those positions.
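The prioritization described above can be sketched in a few lines of Python. This is a minimal illustration, not the original script: the field names (`posted`, `applicants`), the `prioritize` helper, and the sample rows are all assumptions made up for the example.

```python
from datetime import date

# Hypothetical rows standing in for the exported spreadsheet; the field names
# and values are illustrative only.
job_posts = [
    {"title": "Data Analyst", "company": "Company A", "posted": date(2023, 4, 10), "applicants": 45},
    {"title": "BI Analyst", "company": "Company B", "posted": date(2023, 4, 12), "applicants": 80},
    {"title": "Data Analyst", "company": "Company C", "posted": date(2023, 4, 12), "applicants": 12},
]


def prioritize(posts):
    """Newest posts first; among posts from the same day, fewest applicants first."""
    return sorted(posts, key=lambda p: (-p["posted"].toordinal(), p["applicants"]))


ranked = prioritize(job_posts)
for post in ranked:
    print(post["posted"], post["applicants"], post["title"], post["company"])
```

Sorting on a tuple keeps both criteria in a single pass: the negated date ordinal puts the most recent posts at the top, and the applicant count breaks ties within a day.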

With this target in mind, the data points I needed to capture were relatively simple: job title, company name, location, post date, and post link. While writing the script I bounced back and forth between a Beautiful Soup approach and a Selenium approach. Selenium allowed me to log in and get higher-quality posts, but it became unsustainable: LinkedIn's platform recognizes a bot and throws a CAPTCHA at login after a few runs of the script. This made development very difficult.

A setback in my plans

I was still able to produce data using a guest search URL that did not require login. However, when I reviewed the job posts from this guest search, I felt that the results were different from those a user sees when searching while logged in to their LinkedIn account. The guest search URL seems to return less up-to-date job posts, often including posts from recruiters and for contract positions. There were some high-quality posts in this data, just not as many. I felt this undermined my initial plan of using the data to direct my job search, so I decided to focus on other projects at this point, feeling that this one might be a dead end.

Pivoting to a new direction

While working on other projects I continued researching data analytics portfolios to help shape my projects and to get ideas for new ones. One of the resources I used extensively was Luke Barousse's self-titled YouTube channel, https://www.youtube.com/@LukeBarousse. In one of Luke's videos he showcased a project from one of his subscribers that analyzed the skills and education requirements for positions in data fields. You can see this project at skillquery.com.

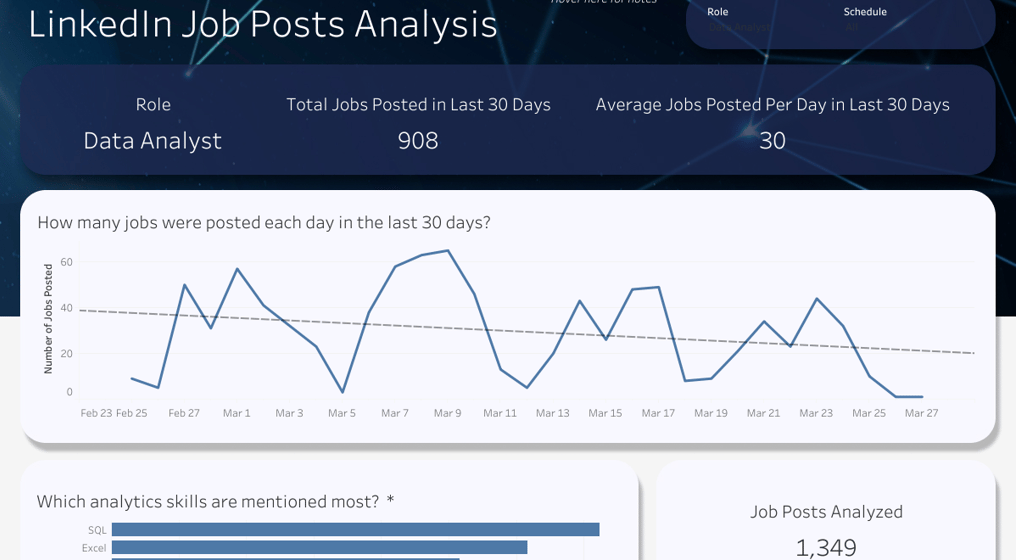

I felt inspired by this project and decided I could put my own spin on it by tailoring the same type of analysis to the needs of my own job search. I could add visualizations showing the number of jobs added over the past month to act as a key performance indicator (KPI) for the job market. Focusing on data from my geographical search area and on remote jobs would also make it more applicable to my job search.

Inspiration leads to success

Rather than using the web-scraped data in a spreadsheet to decide which job posts to prioritize, I decided to use a dashboard as a barometer of job market performance for positions that meet my own personal criteria. From this point the project really took off. I set up a local instance of PostgreSQL and cleaned up the Python web scraper I had written previously. I also added code to the script to capture more information to feed my dashboard. This included scraping the entire job description and scanning it for specific keywords to identify popular skill requirements for the positions I was searching for.
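As a rough sketch of the keyword scan described above, the snippet below checks a job description against a list of skills. The skill list, the `find_skills` helper, and the sample text are assumptions for illustration; the actual keywords and matching rules in my script may differ.

```python
import re

# Assumed skill list for illustration; not the exact keywords tracked in the project.
SKILLS = ["SQL", "Python", "Tableau", "Excel", "Power BI"]

# One case-insensitive pattern per skill. The lookarounds stop "SQL" from
# matching inside a longer token such as "PostgreSQL" or "NoSQL".
PATTERNS = {
    skill: re.compile(
        r"(?<![A-Za-z0-9])" + re.escape(skill) + r"(?![A-Za-z0-9])",
        re.IGNORECASE,
    )
    for skill in SKILLS
}


def find_skills(description):
    """Return the tracked skills mentioned in a job description, in SKILLS order."""
    return [skill for skill, pattern in PATTERNS.items() if pattern.search(description)]


sample = "Must know SQL and Tableau; experience with PostgreSQL and excel is a plus."
print(find_skills(sample))
```

Matching on token boundaries rather than plain substrings matters for short skill names, which otherwise get counted whenever they appear inside another word.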

From there the project went very smoothly. I finished the scripts, writing multiple versions to capture data on different job titles and for work-from-home or in-office positions. I began running these scripts nightly via Windows Task Scheduler and populating my database with valuable data.

After roughly a week of these scripts running, I began building in Tableau. At this point I hit another obstacle. The way I had set up the tables in the database did not flow well into Tableau and didn't allow for dynamic ranking in the skills visualization I was trying to build. I had to go back a few steps and write another Python script to transform the data into a format that would work better in Tableau.
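To give a sense of what such a transformation can look like, here is a hedged sketch that unpivots a "wide" table (one flag column per skill) into a "long" one (one row per post and skill). The wide layout, the column names, and the `to_long` helper are assumptions for illustration, not a description of my actual tables.

```python
from collections import Counter

# Assumed "wide" layout: one row per job post, one flag column per skill.
wide_rows = [
    {"post_id": 1, "sql": True, "python": True, "tableau": False},
    {"post_id": 2, "sql": True, "python": False, "tableau": True},
    {"post_id": 3, "sql": True, "python": False, "tableau": False},
]


def to_long(rows, id_column="post_id"):
    """Unpivot to one row per (post, skill) pair, keeping only skills that were found."""
    long_rows = []
    for row in rows:
        for column, found in row.items():
            if column != id_column and found:
                long_rows.append({id_column: row[id_column], "skill": column})
    return long_rows


long_rows = to_long(wide_rows)

# With one row per skill mention, ranking is a simple count per skill.
ranking = Counter(r["skill"] for r in long_rows).most_common()
print(ranking)
```

A long table like this gives a BI tool a single skill dimension to count and rank, instead of a separate measure for every skill column.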

Launching the dashboard

Working in Tableau Public is less than ideal, as it's a free version of the platform and less feature-rich, especially from a data source perspective. However, I was committed to showcasing my ability to use business intelligence platforms like Tableau to demonstrate my range of skills. I ended up needing one final Python script to achieve end-to-end automation of the data flow, as Tableau Public doesn't allow connecting to databases. I exported my data into spreadsheets saved in Google Drive and connected to these spreadsheets as my data source. Tableau Public then refreshes the data source nightly via its built-in Google Sheets connector.

The results

My dashboard is constantly fluctuating, but it's been a real asset in helping to shape my expectations throughout my job search. I now know that the job market appears to be in a slow decline, but that there is still plenty of opportunity available, with an average of approximately 28 new job opportunities being posted daily (as of writing this blog post). I know that SQL is one of the most important skills to employers, which has helped shape my portfolio development decisions and led me to work with AdventureWorks data to showcase my SQL skills.
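For readers curious how a daily average like this can be derived from scraped post dates, here is a minimal sketch. The dates below are made up for illustration and are not my dashboard's data, and `average_daily_posts` is a hypothetical helper. Note that it averages only over days with at least one post, which is an assumption about how the metric is defined.

```python
from collections import Counter
from datetime import date
from statistics import mean

# Illustrative post dates only; these are not the dashboard's real data.
post_dates = [
    date(2023, 4, 10), date(2023, 4, 10), date(2023, 4, 10),
    date(2023, 4, 11), date(2023, 4, 11),
    date(2023, 4, 12), date(2023, 4, 12), date(2023, 4, 12), date(2023, 4, 12),
]


def average_daily_posts(dates):
    """Mean number of new posts per day, over the days that had at least one post."""
    per_day = Counter(dates)
    return mean(list(per_day.values()))


print(average_daily_posts(post_dates))
```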

Ultimately, this dashboard has become a daily visit for me when I sit down at my desk. It's a project that I'm very proud of and have put a significant amount of work into. My greatest takeaway from this project is that the most important thing when developing something like this is forward momentum. You might not always be going in the right direction, but as long as you're making some kind of progress toward something generally valuable, it's likely your project will eventually make its way to where it needs to be.